Speaking of Perl modules that work -> Net::PubSubHubbub::Publisher

The other day I mentioned that I was looking at [PubSubHubbub http://code.google.com/p/pubsubhubbub/] (PuSH). I was inspired by this post on [Rogers Cadenhead's workbench http://workbench.cadenhead.org/news/3560/pubsubhubbub-lot-easier-than-sounds]. Rogers lays PuSH publishing out in a few steps. Basically, add some XML to your RSS feed and ping a hub when you publish.

I made the needed changes to my blosxom 2.1.1 CGI code, down near the end:

/rss head <?xml version="1.0" encoding="$blog_encoding"?>/
*/rss head <rss version="2.0" xmlns:atom="http://www.w3.org/2005/Atom">/*
/rss head <channel>/
/rss head <title>$blog_title</title>/
*/rss head <atom:link href="http://pwizardry.com/devlog/index.cgi/index.rss" rel="self" type="application/rss+xml" />/*
*/rss head <atom:link rel="hub" href="http://pubsubhubbub.appspot.com" />/*

(*Bold* lines are the inserted changes.)

Then I installed the Perl module [Net::PubSubHubbub::Publisher http://search.cpan.org/~bradfitz/Net-PubSubHubbub-Publisher-0.91/] and hacked together this quick program:

/#! /usr/bin/perl/
/use Net::PubSubHubbub::Publisher;/
/use strict;/
/use warnings;/
/my $hub = "http://pubsubhubbub.appspot.com";/
/my $topic_url = "http://pwizardry.com/devlog/index.cgi/index.rss";/
/my $pub = Net::PubSubHubbub::Publisher->new( hub => $hub);/
/$pub->publish_update( $topic_url ) or die "Ping failed: " . $pub->last_response->status_line;/
/print $pub->last_response->status_line, "\n"; # I want to see it even if it didn't fail/

I then went to FeedBurner, added my feed and subscribed to it in Google Reader. I was about to say "Updates show up in Google Reader in seconds" except this one didn't. I'm not sure why, but I did find this post: [...Get with it, PuSH http://www.educer.org/2009/09/29/on-pubsubhubbub-part-2-get-with-it-push-youre-supposed-to-be-realtime/]. Hmmm...
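For the curious, the ping itself is tiny. As far as I can tell from the PubSubHubbub 0.3 spec, a publish ping is an HTTP POST to the hub with a form-encoded body carrying hub.mode=publish and hub.url set to the topic URL, and the hub answers 204 on success. Here is a rough sketch that only builds that body, without sending anything. The helper names form_encode and publish_ping_body are made up for illustration (they are not part of Net::PubSubHubbub::Publisher), and the encoder assumes a plain ASCII URL.

```perl
use strict;
use warnings;

# Percent-encode every character outside the RFC 3986 unreserved set.
# Assumption: the input is plain ASCII, as feed URLs usually are.
sub form_encode {
    my ($text) = @_;
    $text =~ s/([^A-Za-z0-9\-._~])/sprintf '%%%02X', ord $1/ge;
    return $text;
}

# Build the application/x-www-form-urlencoded body of a publish ping.
# Hypothetical helper, shown only to illustrate what goes over the wire.
sub publish_ping_body {
    my ($topic_url) = @_;
    return join '&',
        'hub.mode=publish',
        'hub.url=' . form_encode($topic_url);
}

print publish_ping_body('http://pwizardry.com/devlog/index.cgi/index.rss'), "\n";
```

In practice the module does the POST for you; the sketch is only meant to show how little there is to a ping.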

local::lib for local Perl module installs -- it made my day

I recently moved this site to a new ISP and had to install some Perl modules that aren't provided on the server. In my case I also needed to install [Catalyst http://www.catalystframework.org/], which includes dozens of modules and a ton of dependencies. This is a common task for any Perl programmer who doesn't have root or who just wants to install some modules outside the global locations. It's also a common Perl headache.

There are plenty of sites on the web that try to tell you how to do this, but hardly any mention [local::lib http://search.cpan.org/~apeiron/local-lib/] yet. local::lib has been around a couple of years now and I'm glad I found it. I just followed the instructions for the bootstrapping technique. Download and unpack the local::lib tarball from CPAN as usual and then:

perl Makefile.PL --bootstrap
make test && make install
perl -I$HOME/perl5/lib/perl5 -Mlocal::lib >> ~/.cshrc

Works great!
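A quick way to check that a local install took effect is to ask Perl where it actually loaded a module from. The sketch below uses a made-up helper, module_path, and two core modules as examples; once the shell picks up the local::lib settings, a module installed locally should report a path under ~/perl5/lib/perl5 (the directory used in the bootstrap command above), while core modules still come from the system Perl.

```perl
use strict;
use warnings;

# Return the file a module was loaded from, or undef if it cannot be loaded.
# Hypothetical helper for illustration only.
sub module_path {
    my ($module) = @_;
    (my $file = "$module.pm") =~ s{::}{/}g;    # Foo::Bar -> Foo/Bar.pm
    return eval { require $file; 1 } ? $INC{$file} : undef;
}

# Swap in the names of modules you installed locally.
for my $module (qw(strict File::Spec)) {
    my $path = module_path($module);
    printf "%-12s %s\n", $module, defined $path ? $path : '(not found)';
}
```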

Mon, 21 Sep 2009

just a test, nothing to see here...


Thu, 26 Mar 2009

What's happening with me?

Last week I released a major update to my Perl module Geo::ReadGRIB on the CPAN (the official source of Perl extensions and the main reason you get so many hits when you Google Frank Lyon Cox).

I've just started on a long-simmering project to replace the Java applet on my website (which I wrote in 2000: [http://www.pwizardry.com/buoyBrowser/]) with something more modern. Flash or Flex is what I'm thinking. I also want to make some interactive animated weather forecast maps from GRIB data as a demo of my module. This means learning ActionScript, which used to make me hold my nose, but they are up to version 3 now and it looks, and smells, a lot better.

My wife is a very successful illustrator and is making some art for a game project which is using Flash. Coincidentally, they are looking for an expert ActionScript programmer. It's possible I'll be able to contribute something to that project if I get my butt in gear. In any case, this points to possible future family business ideas.

I've also been involved in some major home improvement projects. It's been fun! I am looking for a job though.

Wed, 18 Oct 2006

Good article on automation of Perl module deployment:

[From IBM developerWorks http://www-128.ibm.com/developerworks/library/l-depperl.html?ca=drs-l1105] It uses a combination of the CPAN module and some scripts.

Sat, 15 Jul 2006

Africa centric swell animation using Geo::ReadGRIB

/An animated GIF abstracted from a world wide Wavewatch III GRIB dataset/

/showing all the seas where swells bound for southern Africa might be generated/


- Cover jaggy-edged landmass shadow areas with /hopefully/ smoother map images.

- Create a key image that will map the colors used to data values. This will probably be a colorful band along the top or bottom.

- Tweak the data-to-color mappings to best show potentially surfable swells. This will be tricky...

Fri, 23 Jun 2006

Animated data visualization application using Geo::ReadGRIB

/An animated GIF of a world wide Wavewatch III GRIB dataset/


Performance boost in Geo::ReadGRIB .05

*New method extractLaLo()*

I posted [Geo::ReadGRIB http://search.cpan.org/~frankcox/Geo-ReadGRIB-0.5/lib/Geo/ReadGRIB.pm] version .05 which includes a new method with the signature:

*/extractLaLo(data_type, lat1, long1, lat2, long2, time)/*

This will extract all data for a given /data_type/ and /time/ in the rectangular area defined by (lat1, long1) and (lat2, long2) where lat1 >= lat2 and long1 <= long2. That is, lat1 is north of lat2 and long1 is west of long2.

This new method takes advantage of the fact that it takes only one call to wgrib to extract all the locations in the GRIB file for a given type and time. The original extract() method was designed for extracting GRIB data for a single location. I'm working on a project now that will create animated surf weather charts for a large area of ocean. Getting the data is much faster using Geo::ReadGRIB .05.

*How much faster?*

I posted some informal benchmarks [earlier http://pwizardry.com/devlog/index.cgi/2006/06/04#DBM.and.ReadGRIB]. Here are some results from a similar test using a different data set and a larger number of points.

/extract() no hits: 0.14/s 2.44s/
/extractLaLo() no hits: 4.74/s .21s/

This just runs an extract for about 22000 data points using each method. The "no hits" means the objects were fresh and all data had to come from the GRIB file. (Recall, ReadGRIB will cache data in memory and only go to the file if it needs to.) It took the version using extract() about 2.4 seconds per run while extractLaLo() took just .21. That's over 11 times faster. Of course poor extract() was designed for single-point-at-a-time extraction, but everyone loves a winner. For me, it means the difference between a stretch and a coffee break while running tests that need a lot of new GRIB data.
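The corner ordering is easy to get backwards, so here is a small sketch of a helper that reorders any two corners to satisfy lat1 >= lat2 and long1 <= long2. The name normalize_box is made up for illustration and is not part of Geo::ReadGRIB. It also assumes the box does not cross the point where longitudes wrap around, which a real application would need to handle separately.

```perl
use strict;
use warnings;

# Hypothetical helper (not part of Geo::ReadGRIB): reorder two corner
# points so that lat1 >= lat2 and long1 <= long2.
# Assumption: the box does not straddle the longitude wrap-around.
sub normalize_box {
    my ($lat_a, $long_a, $lat_b, $long_b) = @_;
    my ($lat1,  $lat2)  = $lat_a  >= $lat_b  ? ($lat_a,  $lat_b)  : ($lat_b,  $lat_a);
    my ($long1, $long2) = $long_a <= $long_b ? ($long_a, $long_b) : ($long_b, $long_a);
    return ($lat1, $long1, $lat2, $long2);
}

# Corners given south-east first, then north-west.
my @box = normalize_box(20, 200, 45, 160);
print "lat1=$box[0] long1=$box[1] lat2=$box[2] long2=$box[3]\n";
```

The four values come back in the same order they appear in the extractLaLo() signature above, between data_type and time.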

Sun, 04 Jun 2006

Performance experiments with Geo::ReadGRIB and DBM

/This is a screen shot of my ssh session (set to 2-point type) printing data extracted using/

/Geo::ReadGRIB from a worldwide data set in a GRIB file. Since it's marine data, locations over/

/land are UNDEF and I print '*'. For anything else I print a space. This code was originally/

/developed to test my data extraction methods. Common errors like off-by-one would give a/

/distorted map. I adapted this code for my performance tests./


Mon, 15 May 2006

Geo::ReadGRIB now on CPAN!!!!

At last. I've finally solved the technical issues in building my ReadGRIB module, written the documentation and done some multi-platform tests... [Geo::ReadGRIB http://search.cpan.org/~frankcox/Geo-ReadGRIB-0.4/lib/Geo/ReadGRIB.pm] is now up on [CPAN http://cpan.org].

Sun, 23 Apr 2006

Not /exactly/ top_targets... But I was getting close

Really, it seems best not to mess with top_targets at all. I started looking for an empty section that, ideally, was a target anyway. /*dynamic*/ fit the bill. In fact, I had to stub it out with a comment to keep the make from erroring out with /"don't know how to make dynamic"/... I think it's running dynamic because it found wgrib.c -- even though I had dynamic in the SKIP section. Anyway, this works and the dist builds right on Linux, FreeBSD and Windows. This means I can stop messing with the make part and finish the distro for release.

sub MY::dynamic {
'
dynamic :: $(INST_ARCHAUTODIR)/wgrib.exe
@$(NOOP)

$(INST_ARCHAUTODIR)/wgrib.exe: $(C_FILES)
--tab-- $(CC) -o wgrib.exe wgrib.c
--tab-- $(MKPATH) $(INST_ARCHAUTODIR)
--tab-- $(CP) wgrib.exe $(INST_ARCHAUTODIR)
';
}

Sat, 01 Apr 2006

top_targets ... Now I'm starting to get it.

I've been letting the Geo::ReadGRIB project simmer along for a while now. I've worked on a few other projects in the meantime. I even started and finished one, Games::Suduku. The main thing I needed to do is find out enough about ExtUtils::MakeMaker and the makefiles it creates. This means digging into /make/ again too.

Recall that I want to compile a third-party C program which my module code will then use. I can't find any examples doing this anywhere, so I had to discover a good way myself. Looks like the top_targets section is the place to do the compile:

(In lib-wgrib/)

sub MY::top_targets {
'
all :: static

pure_all :: static

static :: wgrib$(LIB_EXT)

wgrib$(LIB_EXT): $(C_FILES)
--tab-- $(CC) -o wgrib wgrib.c
--tab-- $(MKPATH) $(INST_ARCHAUTODIR)
--tab-- $(CP) wgrib $(INST_ARCHAUTODIR)/wgrib
';
}

.c$(OBJ_EXT):
$(CCCMD) $(CCCDLFLAGS) -I$(PERL_INC) $(DEFINE) $*.c

# --- MakeMaker const_cccmd section:
CCCMD = $(CC) -c $(INC) $(CCFLAGS) $(OPTIMIZE) \
$(PERLTYPE) $(MPOLLUTE) $(DEFINE_VERSION) \
$(XS_DEFINE_VERSION)

CC: cc -c
INC:
CCFLAGS: -fno-strict-aliasing -I/usr/local/include
OPTIMIZE: -O
PERLTYPE:
DEFINE_VERSION: -VERSION=\"0.10\"
XS_DEFINE_VERSION: -DXS_VERSION=\"0.10\"

-DPIC -fpic -/usr/local/lib/perl5/5.6.1/i386-freebsd/CORE wgrib.c

Mon, 13 Mar 2006

Not perfect but my lib is coming along...

My proposed name is Geo::ReadGRIB. I've been struggling with the makefiles for a while and I've kludged my way to a truce. It makes without error but compiles wgrib.c twice... It's good enough now to work on other parts of the project.

*./Makefile.PL:*

use 5.006;
use ExtUtils::MakeMaker;
# See lib/ExtUtils/MakeMaker.pm for details of how to influence
# the contents of the Makefile that is written.
WriteMakefile(
    NAME => 'Geo::ReadGRIB',
    VERSION_FROM => 'lib/Geo/ReadGRIB.pm', # finds $VERSION
    AUTHOR => 'NULL <lyon@vwh.net>',
    SKIP => 'qw[static static_lib dynamic dynamic_lib]',
    MYEXTLIB => 'lib-wgriblib$(LIB_EXT)/',
);

sub MY::dynamic_lib {
'
ARMAYBE = :
OTHERLDFLAGS =
INST_DYNAMIC_DEP =

$(INST_DYNAMIC): $(OBJECT) $(MYEXTLIB) $(BOOTSTRAP) $(INST_ARCHAUTODIR)/.exists $(EXPORT_LIST) $(PERL_ARCHIVE) $(PERL_ARCHIVE_AFTER) $(INST_DYNAMIC_DEP)
@echo "Make:MY::dynamic_lib not needed -- don\'t worry"
';
}

sub MY::static {
'
static :: Makefile $(INST_STATIC)
@echo "MY::static: not needed here -- go home"
';
}

sub MY::postamble {
'
$(MYEXTLIB) : lib-wgrib/Makefile
cd lib-wgrib && $(MAKE) $(PASSTHRU)
';
}

*lib-wgrib/Makefile.PL:*

use 5.006;
use ExtUtils::MakeMaker;
WriteMakefile(
    NAME => 'Geo::ReadGRIB::lib-wgrib',
    SKIP => 'qw[all static static_lib dynamic dynamic_lib]',
    clean => {'FILES' => 'liblib-wgrib$(LIB_EXT) wgrib'},
);

sub MY::const_cccmd {
'
CCCMD = $(CC) -o wgrib
';
}

sub MY::dynamic_lib {
'
ARMAYBE = :
OTHERLDFLAGS =
INST_DYNAMIC_DEP =

$(INST_DYNAMIC): $(OBJECT) $(MYEXTLIB) $(BOOTSTRAP) $(INST_ARCHAUTODIR)/.exists $(EXPORT_LIST) $(PERL_ARCHIVE) $(PERL_ARCHIVE_AFTER) $(INST_DYNAMIC_DEP)
@$(NOOP)
@echo "lib-wgrib/Make:MY::dynamic_lib not needed -- don\'t worry about this either"
';
}

sub MY::dynamic_bs {
'
BOOTSTRAP = "*.bs"

$(BOOTSTRAP):
@echo "MY::dynamic_bs - bs, good name"

$(INST_BOOT):
@echo "MY::dynamic_bs - more of the same..."
';
}

It's hard to come back from Mexico

A week in tropical Mexico can do a full reset on your systems. Now... what was it I was doing before?

Fri, 24 Feb 2006

More ReadGRIB: Makefile.PL hacking

I want to distribute a third-party C program called wgrib.c with my module. The C code needs to be compiled on the target platform when the module is installed. Then my Perl code will need to be able to use it. *This isn't done yet, but so far...* I found some good hints in /perlxstut/.

# First I added a directory named lib-wgrib/ and moved the C source there.

# Then I added this to the generated Makefile.PL:

WriteMakefile(
    [...]
    MYEXTLIB => 'lib-wgriblib$(LIB_EXT)/',
);

sub MY::postamble {
'
$(MYEXTLIB) : lib-wgrib/Makefile
cd lib-wgrib && $(MAKE) $(PASSTHRU)
';
}

# In lib-wgrib/ I created another Makefile.PL:

use 5.006;
use ExtUtils::MakeMaker;
WriteMakefile(
    NAME => 'Geo::ReadGRIB::lib-wgrib',
    SKIP => 'qw[all static static_lib dynamic dynamic_lib]',
    clean => {'FILES' => 'liblib-wgrib$(LIB_EXT)'},
);

sub MY::const_cccmd {
'
CCCMD = $(CC) and other stuff TBD...
';
}

The trick here is that the MY:: subroutines override the standard ExtUtils::MakeMaker methods that write each section of the generated Makefile. You can see the names of these sections in the comments in the Makefile.
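To see the override mechanism in isolation, here is a minimal sketch that assumes nothing beyond the core ExtUtils::MakeMaker module. The distribution name Demo::Postamble and the hello target are made up for illustration. Run in an empty scratch directory, it writes a Makefile that contains the text returned by MY::postamble under a "postamble" section comment. Note the single-quoted pieces: in a double-quoted string Perl would try to interpolate things like $(MAKE).

```perl
use strict;
use warnings;
use ExtUtils::MakeMaker;

# MakeMaker calls MY::postamble (if defined) and copies whatever text it
# returns into the generated Makefile. Recipe lines must start with a tab.
sub MY::postamble {
    return join '',
        "\n",
        "hello ::\n",
        "\t", '@echo "hello from MY::postamble"', "\n";
}

# Hypothetical distribution name and version, just enough to generate a Makefile.
WriteMakefile(
    NAME    => 'Demo::Postamble',
    VERSION => '0.01',
);
```

After that, running make hello in the same directory should print the message, assuming a make program is installed.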

Still working...


Thu, 16 Feb 2006

Work on my first CPAN contribution: ReadGRIB

For my first CPAN module I'm planning to offer ReadGRIB, a lib that will open NCEP GRIB files. That's National Centers for Environmental Prediction GRIdded Binary files. They're used for scientific data. In this case, my module was created to read marine weather prediction data. More on my project later.

I'll say for now that it includes a Perl .pm file which wraps a compiled C program called wgrib. I don't yet know how to package a Perl module that includes C code used this way. That is, I will want to have the C code compiled to a standalone program at module install time and then the Perl .pm code will be able to use it.

...

Just to remind myself:

% /usr/local/bin/perl5.8.4 -I/usr/home/lyon/local/lib/site_perl /usr/local/bin/h2xs ...

To run /h2xs/ with libs from my own include locations.
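The "wrapping" part, at least, is simple. The sketch below shows the general idea of running a standalone program from Perl and collecting its output. It is not the module's actual code: run_and_capture is a made-up helper, and the running perl itself stands in for the compiled helper so the example works without wgrib installed. The list form of the pipe open avoids the shell, and it assumes a Unix-like system.

```perl
use strict;
use warnings;

# Run an external command (list form, so no shell is involved) and
# return its output lines with trailing newlines removed.
# Hypothetical helper for illustration only.
sub run_and_capture {
    my @command = @_;
    open(my $out, '-|', @command)
        or die "Cannot run $command[0]: $!";
    chomp(my @lines = <$out>);
    close($out)
        or die "$command[0] exited with status " . ($? >> 8);
    return @lines;
}

# Stand-in for the compiled program: the running perl prints two values.
my @values = run_and_capture($^X, '-e', 'print "1.5\n2.5\n"');
print "Got ", scalar(@values), " values: @values\n";
```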

Tue, 07 Feb 2006

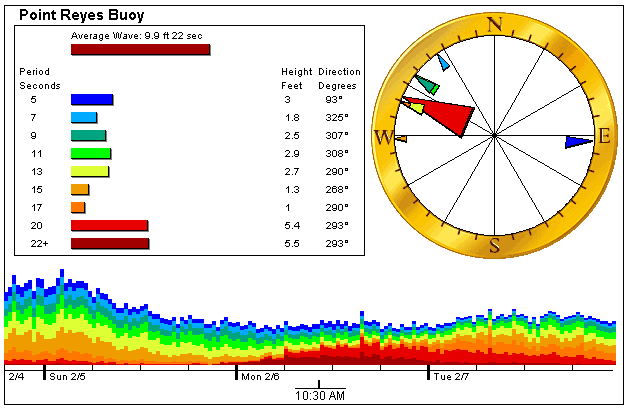

Powerful swell smashes my web site

They held the Mavericks surf contest today because of the powerful conditions. The swell made my buoyBrowser application stop working. It even hung one of my versions of Firefox. Here's the error that was triggered by these rare conditions:

/exception: java.lang.NumberFormatException: for input string "22+"/

My Java buoyBrowser application broke because an extremely long-period swell was running yesterday. It turns out that the dominant period was over 22 seconds, which is the longest period in the data files I download. The CDIP data files show this as "22+". It's rare to see even a foot of 22+ but it was up to 7 feet for that component. I took a screen shot, shown below. I have the pointer on a time when the 22+ band is 5.5 feet and is the largest component. There were about 10 data points where this was true that day.

I have back-end software in Perl that scrapes the CDIP data files and precalculates everything for the Java app on my page. For one thing, it determines which swell component has the most energy. In this case it was 22+, which isn't a number. This is used in the "Average Wave" display, where the size is a calculated combination of the sizes of all the individual components and the period is the period of the strongest component. In the six years this application has been running, this is the first time 22+ has been dominant.

Funny how many humbling lessons a fun little personal programming project can teach you.

The fix? I now make sure no non-numeric data gets into my data files. (s/[^0-9\s]//g)
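Here is that substitution in action, as a small sketch rather than the actual scraper code. The helper name clean_period is made up, and applying it to the period value alone is an assumption on my part: run over a whole record, the same pattern would also strip decimal points from values like 5.5.

```perl
use strict;
use warnings;

# Remove everything except digits and whitespace, using the pattern from the post.
# Hypothetical helper; assumed to be applied to the period field only.
sub clean_period {
    my ($period) = @_;
    $period =~ s/[^0-9\s]//g;
    return $period;
}

for my $raw ('14', '22+') {
    printf "%-4s -> %s\n", $raw, clean_period($raw);
}
```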


Mon, 06 Feb 2006

I just got an account on PAUSE, the Perl Authors Upload Server

I'll be able to contribute Perl code to CPAN.org now. My CPAN id is FRANKCOX and I'll be adding some of my surf forecast stuff soon. Check out [PAUSE http://pause.perl.org] and [CPAN.org http://CPAN.org] for more info...

Fri, 03 Feb 2006

Perl Play: put on POE today

I installed POE and its /many/ dependencies tonight in ~/local/lib/site_perl and tested with a demo application from [Watching log files http://www.stonehenge.com/merlyn/PerlJournal/col01.html]. The application /tails/ the web server access log and displays the results on a web page colorized by time. It's a way to spot clusters of hits. Really though, it's an interesting demo more than anything. It hangs and dies after a while with the error:

[= Error accept Too many open files (24) happened after Cleanup!]

Cool so far...